Editor’s note: the following is a guest article from Deepak Kumar, the founder and CEO of Adaptiva.

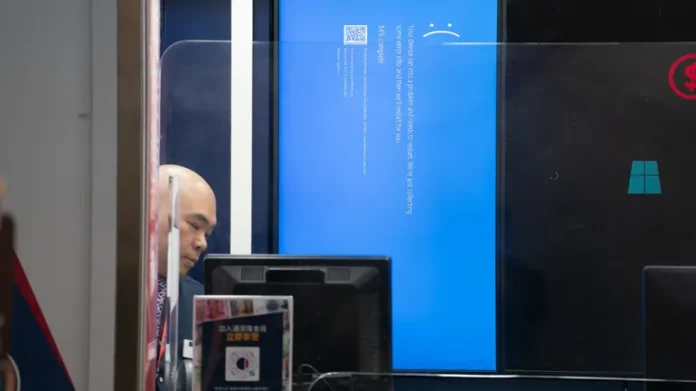

If the widespread technology outages this past month tied to a faulty CrowdStrike update highlighted anything, it is that business systems are so interconnected that a single errant patch file can disrupt normal operations for organizations and their stakeholders worldwide.

This may be obvious to some, but it is alarming to others.

Patching vulnerabilities is crucial for protecting computer networks, but the speed at which updates are issued has come into question. Historically, there have been two approaches to patching: manual and automated.

In light of recent events, some argue that organizations should move away from automated patching, out of concern about outages, in favor of slower manual updates that are perceived as safer. Respectfully, this instinct is wholly incorrect.

Slowing down the patching process is a reflexive reaction that puts an organization directly at risk of a cyberattack. The risk of patching manually, a slow and laborious process for IT teams, is far greater than the risk of patching too aggressively.

With manual patching, IT administrators face the daunting and impractical task of identifying the correct patch, researching it (often through lengthy reports), and completing numerous other tasks to ensure safe company-wide deployment.

It’s an uphill battle for IT teams that leaves many vulnerabilities unaddressed, creating an environment ripe for exploitation by cybercriminals and nation-state actors. While concerns about outages and disruptions are valid, relying on manual, reactive patching could be a catastrophic mistake.

Organizations should continue to leverage the best autonomous patching solution they can afford and embrace the speed and scale that come with it, but with a crucial caveat: there must be guardrails in place to prevent enterprise-wide damage when something goes wrong.

Automated patching limits attack surface

With 90% of cyberattacks starting at the endpoint, unpatched devices remain one of the greatest risks to an organization. Cybercriminals continually search for companies with software vulnerabilities, because those flaws make their disruptive goals easier to achieve.

Through unpatched software, bad actors can breach systems, steal data, and cause chaos, forcing organizations to either pay a ransom or risk exposing customer and employee data.

It is a race against time, and with manual processes, organizations are at a significant disadvantage.

There were 26,447 vulnerabilities disclosed last year, and 75% of the vulnerabilities that bad actors exploited came under attack within 19 days of disclosure. One in four high-risk vulnerabilities was exploited on the same day it was disclosed.

Organizations have far more attack surfaces to protect than employees can handle manually, which is why automation is a must. While automation might make some leaders feel they lack control, manual patching is not a feasible way to keep an organization safe. Automation, with the necessary controls, is essential.

Controls give leaders peace of mind

Today’s IT environments are complex. Every organization has thousands of applications, and often multiple operating systems and drivers, to maintain and patch continuously. When leaders have more control over the process, the likelihood of catastrophic failure shrinks. What does that look like?

- Deploy the first wave of patches fast to a group of non-critical machines that represent a broad cross-section of your environment.

- Wait for those patches to be validated as safe. After all, bad patches get released all the time.

- Allow for human approval.

- Proceed to the next wave.

- Automate this entire flow so that it is repeatable at scale.
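To make the flow above concrete, here is a minimal Python sketch of a wave-by-wave rollout with a validation check and a human approval gate between waves. Everything in it is hypothetical and not any vendor's API: `Wave`, `run_waves`, and the `deploy`, `is_healthy`, and `approve` callbacks stand in for whatever a real patching tool provides, and the "wait for validation" step is reduced to a single health check with no soak period.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Wave:
    """A hypothetical group of machines patched together."""
    name: str
    machines: list[str]


def run_waves(
    waves: list[Wave],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    approve: Callable[[Wave], bool],
) -> list[str]:
    """Deploy wave by wave, halting at the first failed validation or withheld approval.

    Returns the names of the waves that were deployed and validated.
    """
    completed: list[str] = []
    for i, wave in enumerate(waves):
        for machine in wave.machines:
            deploy(machine)
        # Validation: every machine in this wave must report healthy.
        if not all(is_healthy(m) for m in wave.machines):
            print(f"{wave.name}: validation failed, halting rollout")
            break
        completed.append(wave.name)
        # Human approval gate before moving on (not needed after the last wave).
        if i + 1 < len(waves) and not approve(wave):
            print(f"{wave.name}: approval withheld, halting rollout")
            break
    return completed


# Usage: a small pilot wave of non-critical machines, then a broader wave.
waves = [
    Wave("pilot", ["test-01", "test-02"]),
    Wave("broad", ["ws-101", "ws-102", "srv-201"]),
]
deployed: list[str] = []
result = run_waves(
    waves,
    deploy=deployed.append,
    is_healthy=lambda m: True,
    approve=lambda w: True,
)
print(result)
```

Because validation and approval are passed in as callbacks, the loop itself stays fully automated while humans decide what "healthy" means and when to proceed, which mirrors the split of responsibilities the steps describe.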

This approach can help contain and minimize risk and disruption. Done well, patching in waves goes beyond a simple, static phased-deployment mechanism to a dynamic one that adjusts automatically as the IT environment changes.

As machines are updated or replaced, or as their configurations change, the waves adapt in real time, so each deployment phase stays optimally configured. And this needs to be stated clearly: even with a fully automated solution, humans should define the strategy and process, and software should do the rest.
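One way to picture waves that adapt is to derive wave membership from the current inventory every time it changes, rather than maintaining static lists. The Python sketch below assumes a hypothetical inventory record with `os`, `role`, and `critical` fields. It places one non-critical machine per (OS, role) pair in the pilot wave to get a broad cross-section, puts the remaining non-critical machines in a second wave, and patches critical machines last. The grouping rule is only an illustration, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Machine:
    name: str
    os: str         # hypothetical inventory attribute
    role: str       # hypothetical inventory attribute
    critical: bool  # hypothetical flag: patch this machine last


def build_waves(inventory: list[Machine]) -> dict[str, list[str]]:
    """Recompute wave membership from the current inventory.

    pilot:    one non-critical machine per (os, role) pair, for a broad cross-section
    broad:    every other non-critical machine
    critical: critical machines, patched last
    """
    pilot: list[str] = []
    broad: list[str] = []
    critical: list[str] = []
    seen: set[tuple[str, str]] = set()
    # Sort by name so the assignment is deterministic for a given inventory.
    for m in sorted(inventory, key=lambda x: x.name):
        if m.critical:
            critical.append(m.name)
        elif (m.os, m.role) not in seen:
            seen.add((m.os, m.role))
            pilot.append(m.name)
        else:
            broad.append(m.name)
    return {"pilot": pilot, "broad": broad, "critical": critical}


before = [
    Machine("ws-01", "windows", "desktop", False),
    Machine("ws-02", "windows", "desktop", False),
    Machine("mac-01", "macos", "laptop", False),
    Machine("db-01", "linux", "database", True),
]
waves_before = build_waves(before)

# ws-01 is replaced by ws-03; the waves are simply recomputed.
after = [m for m in before if m.name != "ws-01"] + [Machine("ws-03", "windows", "desktop", False)]
waves_after = build_waves(after)
print(waves_before)
print(waves_after)
```

When ws-01 disappears, ws-02 automatically moves into the pilot wave so every OS and role combination is still represented, with no one editing the wave definitions by hand.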

Deploying in phased waves is only one lesson we have learned from the CrowdStrike outage. The outage also made clear that IT leaders need greater control over patching and software updates to protect their organizations.

If anything starts to go wrong, system administrators must be able to pause the rollout and roll back the patch. This capability gives IT and security leaders confidence in relying on automated patching, because they can intervene at the first sign of trouble to limit damage and recover quickly.
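The pause and rollback controls can be sketched as a small state machine. In this hypothetical Python example, `Rollout`, `install`, and `uninstall` are illustrative names rather than a real product's interface. Pausing stops further deployments without undoing anything, and rolling back stops the rollout and removes the patch from every machine that received it, most recent first.

```python
from enum import Enum
from typing import Callable


class State(Enum):
    RUNNING = "running"
    PAUSED = "paused"
    ROLLED_BACK = "rolled_back"


class Rollout:
    """Hypothetical controller that lets an administrator pause or roll back a patch."""

    def __init__(
        self,
        machines: list[str],
        install: Callable[[str], None],
        uninstall: Callable[[str], None],
    ) -> None:
        self.pending = list(machines)
        self.patched: list[str] = []
        self.install = install
        self.uninstall = uninstall
        self.state = State.RUNNING

    def step(self) -> bool:
        """Patch the next machine; return False if the rollout is not running or is done."""
        if self.state is not State.RUNNING or not self.pending:
            return False
        machine = self.pending.pop(0)
        self.install(machine)
        self.patched.append(machine)
        return True

    def pause(self) -> None:
        if self.state is State.RUNNING:
            self.state = State.PAUSED

    def resume(self) -> None:
        if self.state is State.PAUSED:
            self.state = State.RUNNING

    def rollback(self) -> None:
        """Stop the rollout and remove the patch, most recently patched machine first."""
        self.state = State.ROLLED_BACK
        while self.patched:
            self.uninstall(self.patched.pop())


# Usage: patch two machines, pause at the first sign of trouble, then roll back.
log: list[str] = []
rollout = Rollout(
    ["a", "b", "c"],
    install=lambda m: log.append(f"install {m}"),
    uninstall=lambda m: log.append(f"uninstall {m}"),
)
rollout.step()
rollout.step()
rollout.pause()
blocked = rollout.step()  # no deployment happens while paused
rollout.rollback()
print(log)
```

Keeping pause separate from rollback lets administrators halt the spread first and investigate before deciding whether to undo the patch.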

The events of the past month have been unsettling, but it is crucial for organizations to make decisions based on sound logic rather than fear. We have seen the damage caused by cyberattacks, and now we have witnessed the complications that arise when mistakes happen and a patch breaks things.

If there’s a lesson business leaders take from this, it is to prioritize measures that help them avoid either scenario.

Patching remains a top priority for every organization, but slow, manual, and reactive patching presents far more risk than benefit. Automated patching without the capability to pause, cancel, or roll back can be reckless and lead to disruptions or worse.

Automated patching, with the necessary controls, is undoubtedly the best path forward, offering the speed needed to thwart bad actors and the control required to prevent an errant update from causing widespread issues.
