Business email compromise (BEC) attacks, which supplanted ransomware last year as the top financially motivated attack vector threatening organizations, are likely to become harder to track. New investigations by Abnormal Security suggest attackers are using generative AI to create phishing emails, including vendor impersonation attacks of the kind Abnormal flagged earlier this year from the actor dubbed Firebrick Ostrich.

According to Abnormal, by using ChatGPT and other large language models, attackers are able to craft social engineering missives that are free of such red flags as formatting issues, atypical syntax, and incorrect grammar, punctuation, spelling and email addresses.

The firm used its own AI models to determine that certain emails sent to its customers, and later identified as phishing attacks, were probably AI-generated, according to Dan Shiebler, head of machine learning at Abnormal. “While we are still doing a complete analysis to understand the extent of AI-generated email attacks, Abnormal has seen a definite increase in the number of attacks with AI indicators as a percentage of all attacks, particularly over the past few weeks,” he said.

Using faux Facebook violations as lure

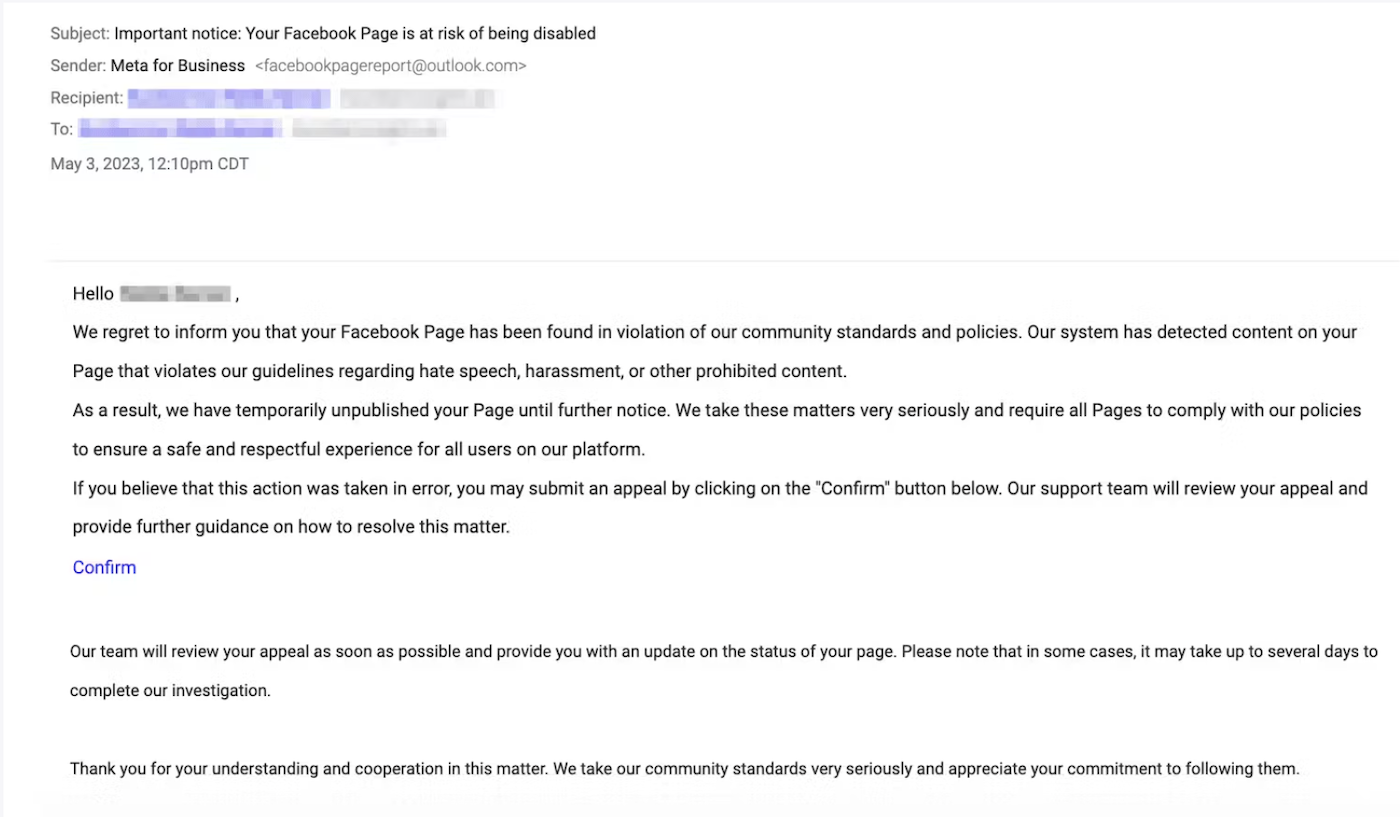

A new tactic noted by Abnormal involves spoofing official Facebook notifications informing the target that they are “in violation of community standards” and that their page has been unpublished. The user is then asked to click a link to file an appeal. The link leads to a phishing page that harvests the user’s credentials, which attackers can use to access the target’s Facebook Page or sell on the dark web (Figure A).

Figure A

Shiebler said the fact that the text within the Facebook spoofs is nearly identical to the language expected from Meta for Business suggests that less sophisticated attackers will be able to easily avoid the usual phishing pitfalls.

“The danger of generative AI in email attacks is that it allows threat actors to write increasingly sophisticated content, making it more likely that their target will be deceived into clicking a link or following their instructions,” he said, adding that AI can also be used to create greater personalization.

“Imagine if threat actors were to input snippets of their victim’s email history or LinkedIn profile content within their ChatGPT queries. Emails will begin to show the typical context, language, and tone the victim expects, making BEC emails even more deceptive,” he said.

Looks like a phish but may be a dolphin

According to Abnormal, false positives are another complication in detecting phishing emails crafted with AI. Because many legitimate emails are built from templates using common phrases, they can be flagged because of their similarity to what an AI model would generate, noted Shiebler. He said analyses do give some indication that an email may have been created by AI, “and we use that signal (among thousands of others) to determine malicious intent.”

AI-generated vendor compromise, invoice fraud

Abnormal found instances of business email compromises built by generative AI to impersonate vendors, containing invoices requesting payment to an illegitimate payment portal.

In one case that Abnormal flagged, attackers impersonated an employee’s account at the target company and used it to send a fake email to the payroll department to update the direct deposit information on file.

Shiebler noted that, unlike traditional BEC attacks, AI-generated BEC salvos are written professionally. “They are written with a sense of formality that would be expected around a business matter,” he said. “The impersonated attorney is also from a real-life law firm—a detail that gives the email an even greater sense of legitimacy and makes it more likely to deceive its victim,” he added.

Takes one to know one: Using AI to catch AI

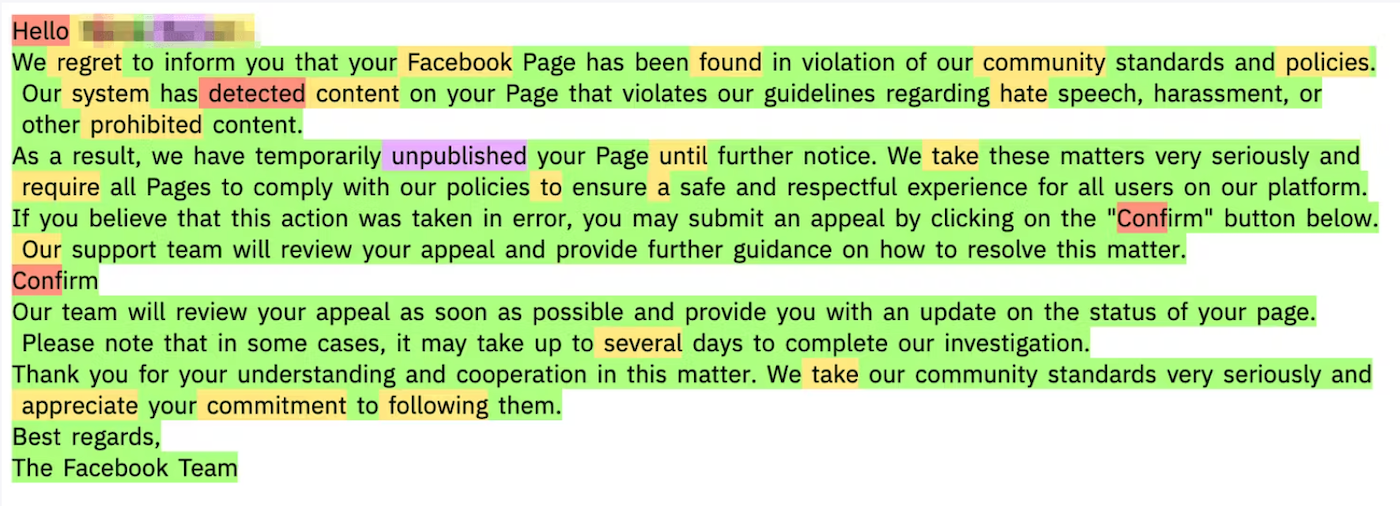

Shiebler said that detecting AI authorship involves a mirror operation: running suspected LLM-generated email text through an AI prediction engine to analyze how likely it is that an AI system would select each word in the email.

Abnormal used open-source large language models to analyze the probability that each word in an email can be predicted given the context to the left of the word. “If the words in the email have consistently high likelihood (meaning each term is highly aligned with what an AI model would say, more so than in human text), then we classify the email as possibly written by AI,” he said (Figure B).

Figure B
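The likelihood check Shiebler describes can be illustrated with a minimal sketch. This is not Abnormal's implementation: a toy bigram word model stands in for the open-source large language model, and the function names (`train_bigram`, `mean_log_likelihood`, `possibly_ai_written`) and the threshold are hypothetical. The idea it shows is the same, though: score each word given the context to its left, then flag text whose words are consistently easy to predict.

```python
import math
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count word pairs. A toy stand-in for an LLM's next-word distribution."""
    pair_counts = defaultdict(Counter)
    vocab = set()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()
        vocab.update(words)
        for prev, cur in zip(words, words[1:]):
            pair_counts[prev][cur] += 1
    return pair_counts, vocab


def mean_log_likelihood(text, pair_counts, vocab):
    """Average log-probability of each word given the word to its left."""
    words = ["<s>"] + text.lower().split()
    if len(words) < 2:
        raise ValueError("text must contain at least one word")
    smoothing_size = len(vocab) + 1  # add-one smoothing, +1 for unseen words
    total = 0.0
    for prev, cur in zip(words, words[1:]):
        seen = pair_counts.get(prev, Counter())
        prob = (seen[cur] + 1) / (sum(seen.values()) + smoothing_size)
        total += math.log(prob)
    return total / (len(words) - 1)


def possibly_ai_written(text, pair_counts, vocab, threshold):
    """Flag text whose words are, on average, highly predictable.

    The threshold is an assumption; a real system would calibrate it
    and treat the result as one signal among many.
    """
    return mean_log_likelihood(text, pair_counts, vocab) >= threshold
```

In practice the bigram counts would be replaced by per-token probabilities from a real language model, and, as the article notes, a high score alone would not justify blocking an email.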

Shiebler warned that because there are many legitimate use cases where employees use AI to create email content, it is not pragmatic to block all AI-generated emails on suspicion of malice. “As such, the fact that an email has AI indicators must be used alongside many other signals to indicate malicious intent,” he said, adding that the firm does further validation via such AI detection tools as OpenAI Detector and GPTZero.

“Legitimate emails can look AI-generated, such as templatized messages and machine translations, making catching legitimate AI-generated emails difficult. When our system decides whether to block an email, it incorporates much information beyond whether AI may have generated the email using identity, behavior, and related indicators.”

How to combat AI phishing attacks

Abnormal’s report suggested organizations implement AI-based solutions that can detect highly sophisticated AI-generated attacks that are nearly impossible to distinguish from legitimate emails. These solutions must also be able to tell when an AI-generated email is legitimate and when it has malicious intent.

“Think of it as good AI to fight bad AI,” said the report. The firm said that the best AI-driven tools are able to baseline normal behavior across the email environment, including typical user-specific communication patterns, styles, and relationships, rather than just looking for typical (and protean) compromise indicators. Because of that, they can detect the anomalies that may indicate a potential attack, whether the anomalies were created by a human or by AI.
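As a rough illustration of behavioral baselining, the sketch below records which recipients and reply-to domains each sender has used before, then reports anything new in an incoming message. It is a simplified assumption of how such a baseline might work, not a description of any vendor's product; the `Email` fields, the `RelationshipBaseline` class and the anomaly labels are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Email:
    sender: str
    recipient: str
    reply_to_domain: str


class RelationshipBaseline:
    """Remembers each sender's usual recipients and reply-to domains."""

    def __init__(self):
        self._recipients = defaultdict(set)
        self._reply_domains = defaultdict(set)

    def observe(self, email):
        """Add a known-good email to the baseline."""
        self._recipients[email.sender].add(email.recipient)
        self._reply_domains[email.sender].add(email.reply_to_domain)

    def anomalies(self, email):
        """Return labels for anything this sender has not done before."""
        found = []
        if email.recipient not in self._recipients.get(email.sender, set()):
            found.append("new_recipient")
        if email.reply_to_domain not in self._reply_domains.get(email.sender, set()):
            found.append("new_reply_to_domain")
        return found
```

Because the check looks at behavior rather than wording, it gives the same answer whether the message text was written by a person or generated by AI.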

“Organizations should also practice good cybersecurity hygiene, including implementing continuous security awareness training to ensure employees are vigilant about BEC risks,” said Shiebler. “Additionally, implementing tactics like password management and multi-factor authentication will ensure the organization can limit further damage if any attack succeeds.”
